Google Document AI: Streamlining Document Management

The widespread use of the Internet and the rise of crowdsourcing have fueled Google’s AI across many fields. Google’s deep learning techniques shape our daily routines behind the scenes, from speech-to-text and the language understanding of virtual assistants to the deep-network image classification that helps users with their searches.

However, many people may not be aware that Google also sells a range of hardware and software products as services. For instance, the Google Vision API lets users apply state-of-the-art vision methods to tasks such as object detection, image labeling, and optical character recognition (OCR). Hardware such as GPUs and virtual machines can also be rented to train customized models and to run fast, computationally efficient servers.

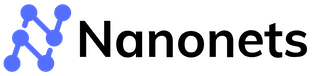

One service that is easy to overlook amid Google's kaleidoscopic range of offerings is Document AI. Like the Google Vision API, Google Document AI uses cutting-edge methods, in this case to extract information from piles of paperwork. This article describes what Google Document AI is and the technology behind it, then briefly covers its capabilities, its applications, and some competitors that may be better suited to particular scenarios.

What is Google Document AI?

Google Document AI stands out because it can automate document data processing at scale. It's a product of Google's decades of AI research.

Besides generic document analysis and retrieval, Google Document AI offers specialized processors for formats such as receipts, invoices, payslips, and other forms that organizations often process in large batches.

Getting Started with Document AI

To get a feel for the quality of extraction, you can head to the Google Document AI page and try one of Google's sample documents or upload one of your own. The output is JSON that can be downloaded and analyzed.
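Once downloaded, the JSON can be inspected with a few lines of Python. The sketch below assumes the file follows Document AI's `Document` JSON layout with camelCase keys (a top-level `text` string and a `pages` list); `load_document`, `summarize_document`, and the sample content are hypothetical.

```python
import json


def load_document(path: str) -> dict:
    """Read a downloaded Document AI JSON file into a plain dict."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def summarize_document(doc: dict) -> dict:
    """Report page count, character count, and a short one-line text preview."""
    text = doc.get("text", "")
    return {
        "pages": len(doc.get("pages", [])),
        "characters": len(text),
        "preview": text[:40].replace("\n", " "),
    }


if __name__ == "__main__":
    # Hypothetical sample; for a real download use load_document("document.json").
    sample = {"text": "INVOICE\nTotal: 42.00\n", "pages": [{"pageNumber": 1}]}
    print(summarize_document(sample))
```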

In addition to fully automatic extraction, Google Document AI incorporates a human-in-the-loop workflow in which human reviewers can flag and correct extraction mistakes. These corrections are fed back into learning, so the AI's extraction ability keeps improving.

Types of Data Supported by Google Document AI

Text

The main goal of Google Document AI is to extract the text within a document, removing much of the manual effort of reading through forms. Besides the text itself, Google Document AI also detects the document's layout, such as where lines and paragraphs break. This lets users post-process the JSON output to suit their needs after retrieving the necessary information.
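Lines and paragraphs are not stored as separate strings. Instead, each layout element points back into the top-level `text` through a `textAnchor` made of `textSegments` with `startIndex`/`endIndex` offsets. A minimal sketch for recovering each page's lines, assuming the camelCase JSON export and that offsets may appear as strings (as int64 values do in protobuf JSON), with a zero `startIndex` omitted; the helper names and sample are hypothetical:

```python
from __future__ import annotations


def anchor_text(full_text: str, layout: dict) -> str:
    """Concatenate the slices of `full_text` referenced by a layout's textAnchor."""
    segments = layout.get("textAnchor", {}).get("textSegments", [])
    # Offsets may be strings; a missing startIndex means 0.
    return "".join(
        full_text[int(seg.get("startIndex", 0)):int(seg.get("endIndex", 0))]
        for seg in segments
    )


def page_lines(doc: dict) -> list[list[str]]:
    """Return each page's detected lines, without their trailing newlines."""
    text = doc.get("text", "")
    return [
        [anchor_text(text, line.get("layout", {})).rstrip("\n")
         for line in page.get("lines", [])]
        for page in doc.get("pages", [])
    ]


if __name__ == "__main__":
    # Hypothetical one-page sample with two detected lines.
    sample = {
        "text": "Name: John\nAge: 19\n",
        "pages": [{"lines": [
            {"layout": {"textAnchor": {"textSegments": [{"endIndex": "11"}]}}},
            {"layout": {"textAnchor": {"textSegments": [
                {"startIndex": "11", "endIndex": "19"}]}}},
        ]}],
    }
    print(page_lines(sample))  # [['Name: John', 'Age: 19']]
```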

For example, depending on the industry or purpose, one may run further data analysis or respond to forms after extracting the necessary information from a PDF document. Google Document AI handles both typed and handwritten text, which adds flexibility.

Document overview information

Google Document AI also provides overview information about the document, including the orientation of each page, text anchors (offsets that link each detected element to its position in the extracted text), and the languages detected in the document (a single document may contain more than one). This information helps when tidying or organizing batches of documents. For instance, pages can be automatically rotated upright before further inspection or OCR, or documents can be separated into piles according to the language of each response.
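The sorting workflow just described can be sketched as follows: flag pages whose detected orientation is not upright, and bin documents by their dominant detected language. It assumes each page carries `layout.orientation` (one of `PAGE_UP`, `PAGE_RIGHT`, `PAGE_DOWN`, `PAGE_LEFT`) and a `detectedLanguages` list of `languageCode`/`confidence` entries. The helper names and the choice to sum confidences across pages are illustrative, not part of Document AI.

```python
from __future__ import annotations

from collections import defaultdict

# Orientations that mean the page is not upright; ORIENTATION_UNSPECIFIED is ignored.
NOT_UPRIGHT = {"PAGE_RIGHT", "PAGE_DOWN", "PAGE_LEFT"}


def pages_needing_rotation(doc: dict) -> list[int]:
    """Return the page numbers whose detected orientation is not PAGE_UP."""
    flagged = []
    for i, page in enumerate(doc.get("pages", []), start=1):
        if page.get("layout", {}).get("orientation") in NOT_UPRIGHT:
            flagged.append(int(page.get("pageNumber", i)))
    return flagged


def dominant_language(doc: dict) -> str:
    """Return the language code with the highest confidence summed over all pages."""
    scores: dict[str, float] = defaultdict(float)
    for page in doc.get("pages", []):
        for lang in page.get("detectedLanguages", []):
            scores[lang["languageCode"]] += float(lang.get("confidence", 0.0))
    # "und" is the BCP-47 code for an undetermined language.
    return max(scores, key=scores.get) if scores else "und"


def group_by_language(docs: dict[str, dict]) -> dict[str, list[str]]:
    """Sort document names into piles keyed by each document's dominant language."""
    piles: dict[str, list[str]] = defaultdict(list)
    for name, doc in docs.items():
        piles[dominant_language(doc)].append(name)
    return dict(piles)


if __name__ == "__main__":
    # Hypothetical two-page English document whose second page is sideways.
    doc = {"pages": [
        {"pageNumber": 1, "layout": {"orientation": "PAGE_UP"},
         "detectedLanguages": [{"languageCode": "en", "confidence": 0.9}]},
        {"pageNumber": 2, "layout": {"orientation": "PAGE_RIGHT"},
         "detectedLanguages": [{"languageCode": "en", "confidence": 0.8}]},
    ]}
    print(pages_needing_rotation(doc), dominant_language(doc))
```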

Key-value Pairs and Table Extractions

Besides text and overview information, one of the most important things to extract from documents is structured data. Manual data extraction is repetitive, tedious, and error-prone, especially when documents are scanned as images rather than stored as text.

In most cases, such data is not stored in paragraphs or sentences but in tables and key-value pairs (KVPs). A KVP consists of two linked data items, a key and a value, where the key identifies the value (e.g., Name: John or Age: 19). In documents such as forms, these data types are very common, and text extraction alone is not enough. Moreover, unlike tables, KVPs often appear in unpredictable layouts and are frequently partially handwritten. Even with state-of-the-art text extraction, identifying KVPs from the text alone can be difficult without also considering visual cues on the page (e.g., bounding boxes, lines).

Luckily, Google Document AI also extracts both of these data types, so users get clean, organized text and can automatically pull data out of the underlying structures for further use.

Example Uses of Google Document AI

Prerequisites

To use any of the services provided by the Google Vision API, one must set up a project in the Google Cloud console and complete a series of authentication steps. The following is a step-by-step overview of how to set up the Vision API.

- Create a Project in Google Cloud Console: A project must be created before you can use any Vision service. The project organizes resources such as collaborators, APIs, and pricing information.

- Enable Billing: Before enabling the Vision API, you must enable billing for your project. Pricing details are addressed in later sections.

- Enable Vision API: Enable the Cloud Vision API for the project.

- Create Service Account: Create a service account in the project, then create a key for it. The key is downloaded to your computer as a JSON file.

- Set Up Environment Variable GOOGLE_APPLICATION_CREDENTIALS: Set this variable to the path of the downloaded JSON key file so that the client libraries can find your credentials.

A more detailed walkthrough of these steps is available in the official Google Cloud documentation:

https://cloud.google.com/vision/docs/quickstart-client-libraries
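Before calling any client library, it can save time to confirm that `GOOGLE_APPLICATION_CREDENTIALS` actually points to a service-account key. The check below is a hypothetical helper, not part of Google's libraries (which read the variable themselves). It relies only on the key file being JSON with `"type": "service_account"` and a `client_email` field, as downloaded service-account keys are.

```python
import json
import os


def check_credentials(env=None) -> str:
    """Return the client_email of the key named by GOOGLE_APPLICATION_CREDENTIALS.

    Raises RuntimeError with a readable message if the variable is unset, the
    file is missing, or the file is not a service-account key.
    """
    env = os.environ if env is None else env
    path = env.get("GOOGLE_APPLICATION_CREDENTIALS")
    if not path:
        raise RuntimeError("GOOGLE_APPLICATION_CREDENTIALS is not set")
    if not os.path.isfile(path):
        raise RuntimeError(f"key file not found: {path}")
    with open(path, encoding="utf-8") as fh:
        key = json.load(fh)
    if key.get("type") != "service_account":
        raise RuntimeError(f"{path} is not a service-account key")
    return key.get("client_email", "")


if __name__ == "__main__":
    try:
        print("Using service account:", check_credentials())
    except RuntimeError as err:
        print("Credentials problem:", err)
```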

Code

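Document processing can be split into two parts: extracting the text and understanding it. For text extraction, the following sample uses the Cloud Vision API's DOCUMENT_TEXT_DETECTION feature to detect text in a PDF stored in Google Cloud Storage (GCS):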

```python
import json
import re

from google.cloud import storage
from google.cloud import vision


def async_detect_document(gcs_source_uri, gcs_destination_uri):
    """OCR with PDF/TIFF as source files on GCS"""
    # Supported mime_types are: 'application/pdf' and 'image/tiff'
    mime_type = 'application/pdf'

    # How many pages should be grouped into each json output file.
    batch_size = 2

    client = vision.ImageAnnotatorClient()

    feature = vision.Feature(
        type_=vision.Feature.Type.DOCUMENT_TEXT_DETECTION)

    gcs_source = vision.GcsSource(uri=gcs_source_uri)
    input_config = vision.InputConfig(
        gcs_source=gcs_source, mime_type=mime_type)

    gcs_destination = vision.GcsDestination(uri=gcs_destination_uri)
    output_config = vision.OutputConfig(
        gcs_destination=gcs_destination, batch_size=batch_size)

    async_request = vision.AsyncAnnotateFileRequest(
        features=[feature], input_config=input_config,
        output_config=output_config)

    operation = client.async_batch_annotate_files(
        requests=[async_request])

    print('Waiting for the operation to finish.')
    operation.result(timeout=420)

    # Once the request has completed and the output has been
    # written to GCS, we can list all the output files.
    storage_client = storage.Client()

    match = re.match(r'gs://([^/]+)/(.+)', gcs_destination_uri)
    bucket_name = match.group(1)
    prefix = match.group(2)

    bucket = storage_client.get_bucket(bucket_name)

    # List objects with the given prefix, skipping "folder" placeholders.
    blob_list = [blob for blob in bucket.list_blobs(prefix=prefix)
                 if not blob.name.endswith('/')]
    print('Output files:')
    for blob in blob_list:
        print(blob.name)

    # Process the first output file from GCS.
    # Since we specified batch_size=2, the first output file contains
    # the first two pages of the input file.
    output = blob_list[0]

    # download_as_bytes() replaces the deprecated download_as_string().
    json_string = output.download_as_bytes()
    response = json.loads(json_string)

    # The actual response for the first page of the input file.
    first_page_response = response['responses'][0]
    annotation = first_page_response['fullTextAnnotation']

    # Here we print the full text from the first page.
    # The response contains more information:
    # annotation/pages/blocks/paragraphs/words/symbols
    # including confidence scores and bounding boxes
    print('Full text:\n')
    print(annotation['text'])
```

If the text is in an image rather than a PDF, one may instead use the Cloud Vision API's image text detection. Finally, one may use Google's Cloud Natural Language API to extract meaning from the text.

All the details regarding the code can be found here.
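The sample above prints only the first page of the first output file. Each response in Vision's output carries its page number under `context.pageNumber`, so a sketch like the following can merge every output file into the whole document in page order. It assumes you have already parsed each blob, e.g. `[json.loads(blob.download_as_bytes()) for blob in blob_list]`; the helper names and sample data are hypothetical.

```python
from __future__ import annotations


def collect_pages(output_files: list[dict]) -> dict[int, str]:
    """Merge parsed Vision output files into {page_number: text}, ordered by page."""
    pages: dict[int, str] = {}
    for output in output_files:
        for response in output.get("responses", []):
            number = int(response.get("context", {}).get("pageNumber", 0))
            # Pages where no text was detected have no fullTextAnnotation.
            pages[number] = response.get("fullTextAnnotation", {}).get("text", "")
    return dict(sorted(pages.items()))


def full_document_text(output_files: list[dict]) -> str:
    """Join the text of all pages, in page order, separated by newlines."""
    return "\n".join(collect_pages(output_files).values())


if __name__ == "__main__":
    # Hypothetical parsed output files, deliberately listed out of order.
    second = {"responses": [{"context": {"pageNumber": 3},
                             "fullTextAnnotation": {"text": "Page three"}}]}
    first = {"responses": [
        {"context": {"pageNumber": 1}, "fullTextAnnotation": {"text": "Page one"}},
        {"context": {"pageNumber": 2}},
    ]}
    print(collect_pages([second, first]))
```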

Applications of Google Document AI

There is a high demand for Google Document AI across various industries and for personal use. We have divided the applications into business and personal use, and provided some Google Document AI examples for each category.

Personal

While document-reading automation is mainly used in large-scale production to reduce labor costs, fast and accurate data and text extraction can also improve personal routines and organization.

- ID Scans and Data Conversion: Personal IDs and passports are often stored as PDF files or scanned documents across websites. They contain various data, particularly KVPs (e.g., from given name to date of birth), that online applications frequently ask for, yet we have to find and retype the same information again and again. Proper data extraction from PDFs quickly converts this data into machine-readable text. Filling in forms then becomes a trivial task for programs, and the only manual effort left is a quick scan-through to double-check.

- Invoice Data Extraction: Budgeting is a crucial aspect of daily life. Spreadsheets have already simplified the task, but data entry remains a manual step, and automating it removes much of the budgeting effort. Users can quickly analyze the results of Google Document AI and spot unusual or unaffordable purchases.
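For the budgeting use case, here is a sketch of flagging unusual purchases once receipt or invoice totals have been extracted. The record format (`vendor`, `category`, `total`) is hypothetical, as is the rule of flagging anything above three times its category's median. A median is used rather than a mean so that a single large purchase cannot hide itself by inflating the baseline.

```python
from collections import defaultdict
from statistics import median


def spending_by_category(purchases):
    """Sum extracted totals per category."""
    totals = defaultdict(float)
    for p in purchases:
        totals[p["category"]] += p["total"]
    return dict(totals)


def flag_unusual(purchases, factor=3.0):
    """Return purchases whose total exceeds `factor` times their category's median."""
    by_category = defaultdict(list)
    for p in purchases:
        by_category[p["category"]].append(p["total"])
    medians = {cat: median(vals) for cat, vals in by_category.items()}
    return [p for p in purchases if p["total"] > factor * medians[p["category"]]]


if __name__ == "__main__":
    # Hypothetical values pulled from receipts.
    purchases = [
        {"vendor": "Grocer A", "category": "groceries", "total": 50},
        {"vendor": "Grocer B", "category": "groceries", "total": 60},
        {"vendor": "Grocer C", "category": "groceries", "total": 55},
        {"vendor": "Grocer D", "category": "groceries", "total": 400},
        {"vendor": "Cafe", "category": "dining", "total": 30},
    ]
    print(spending_by_category(purchases))
    print([p["vendor"] for p in flag_unusual(purchases)])
```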

Business

Businesses and large organizations deal with thousands of similarly formatted documents daily: big banks receive numerous applications in identical formats, and research teams have to analyze piles of forms to conduct statistical analysis. Automating the initial step of extracting data from documents therefore frees staff from repetitive data entry and lets them focus on analyzing data and reviewing applications instead of keying in information.

- Payment Reconciliation: Payment reconciliation compares bank statements against your accounting records to ensure the amounts match. Reconciliation may be reasonably straightforward for small firms whose clients and cash flows come from few sources and banks. However, as a company grows and its money inflows and outflows become more diverse, this process quickly becomes daunting and labor-intensive, and the probability of error rises sharply.

Many automated methods have therefore been proposed to reduce the human effort in this pipeline. The first stage of payment reconciliation is extracting data from documents, which can be challenging for a large company with many sectors. Google Document AI can streamline this stage and let employees focus on faulty data and on investigating potentially fraudulent events in the cash flow.

- Statistical Analysis: Corporations and organizations need feedback from customers, citizens, or even experiment participants to improve their products, services, and planning. Evaluating feedback comprehensively often requires statistical analysis. However, survey data may come in numerous formats or be buried in typed or handwritten text. Google Document AI can ease the process by surfacing the relevant data from batches of documents, making valuable information easier to find and ultimately increasing efficiency.
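The matching step at the heart of payment reconciliation can be sketched once statement lines and ledger entries have been extracted. This minimal, greedy version pairs records with identical amounts whose dates are at most a few days apart, preferring the closest date. The record fields (`date`, `amount_cents`, plus any reference fields) and the three-day tolerance are assumptions; real reconciliation would also compare references, payees, and partial payments. Amounts are kept in integer cents to avoid floating-point comparison problems.

```python
from datetime import date


def reconcile(bank_lines, ledger, max_days=3):
    """Greedily match bank-statement lines to ledger entries.

    A pair matches when amount_cents are equal and the dates are at most
    `max_days` apart; among candidates, the closest date wins.
    Returns (matched_pairs, unmatched_bank_lines, unmatched_ledger_entries).
    """
    remaining = list(ledger)
    matched, unmatched_bank = [], []
    for line in sorted(bank_lines, key=lambda r: r["date"]):
        candidates = [
            entry for entry in remaining
            if entry["amount_cents"] == line["amount_cents"]
            and abs((entry["date"] - line["date"]).days) <= max_days
        ]
        if candidates:
            best = min(candidates, key=lambda e: abs((e["date"] - line["date"]).days))
            remaining.remove(best)
            matched.append((line, best))
        else:
            unmatched_bank.append(line)
    return matched, unmatched_bank, remaining


if __name__ == "__main__":
    # Hypothetical extracted records.
    bank = [{"date": date(2024, 3, 1), "amount_cents": 12000, "ref": "A"},
            {"date": date(2024, 3, 9), "amount_cents": 999, "ref": "C"}]
    ledger = [{"date": date(2024, 2, 28), "amount_cents": 12000, "id": 1},
              {"date": date(2024, 3, 20), "amount_cents": 999, "id": 3}]
    matched, unmatched_bank, unmatched_ledger = reconcile(bank, ledger)
    print(len(matched), "matched;", len(unmatched_bank), "bank lines need review")
```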

Technology Behind Google Document AI

The proprietary AI technology behind Google Document AI falls within computer vision and natural language processing (NLP). Computer vision aims to let machines understand images, whereas NLP extracts useful information from sequences of words or sentences. Essentially, Google Document AI uses computer vision, particularly optical character recognition, to detect words and phrases in a given PDF, and then feeds these words and phrases into an NLP network to find the meaning behind them. The following briefly describes the basic techniques used in these fields.

Computer vision

Since the rise of deep learning, traditional image processing methods for detecting features have largely been superseded because they are far less accurate. Current computer vision techniques are now mainly built on convolutional neural networks (CNNs).

CNNs are a type of neural network built around a traditional image and signal processing tool: the kernel. Kernels are small matrices that slide over an image, computing a dot product at each position, so that each kernel responds to a particular feature. The main difference is that in conventional image processing the weights within a kernel are preset by hand, whereas in CNNs they are learned from data. Preset kernels work well for specific, simple tasks such as line and corner detection, but they fall short on tasks such as text detection, because the features that distinguish different characters are too complex. The kernel values that would map such features to actual text are simply too hard to determine by hand.
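To make the kernel idea concrete, here is a minimal pure-Python sketch of sliding a 3x3 kernel over a tiny grayscale image. It computes a "valid" (no padding, stride 1) cross-correlation, which is what CNN convolution layers compute in practice. The kernel is the classic hand-set Sobel kernel for vertical edges; in a CNN, those nine numbers would instead be learned during training. The image values are made up.

```python
def cross_correlate2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1), taking a dot product at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hand-set Sobel kernel: responds to dark-to-bright transitions from left to right.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

if __name__ == "__main__":
    # A 3x5 image: dark on the left, bright on the right.
    image = [[0, 0, 0, 1, 1]] * 3
    print(cross_correlate2d(image, SOBEL_X))  # [[0, 4, 4]]
```

The output is zero over the flat dark region and positive where the window straddles the edge, which is exactly the "feature selection" a kernel performs.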

Interestingly, the concept of CNNs has existed for decades but has only become widely used recently, as rapid growth in computational hardware made deep learning feasible. Nowadays, state-of-the-art approaches to vision tasks, from classification and segmentation to anomaly detection and content generation, revolve around CNNs.

In simple terms, text, key-value pairs, and tables are all features of a PDF that Google Document AI can detect with the help of CNNs.

Natural Language Processing

Just as it did for computer vision, deep learning has shed new light on a long-standing research field in computer science: NLP. NLP aims to understand the meaning of words and of how they combine into sentences and paragraphs. This task is often considered even harder than understanding images, because the same phrase can be interpreted differently in different contexts.

For several years, research focused on a type of neural network called long short-term memory (LSTM), which processes a sequence step by step, computing each output from the current input and a memory of previous inputs. More recently, however, the focus has shifted to a different family of networks called transformers. Transformers use attention to learn how strongly each element of a sequence should relate to every other element. In a sentence, for example, certain words may deserve more attention than others regardless of how near or far they are from the word currently being processed. Transformers vastly outperform earlier networks on numerous language tasks, including understanding semantics.
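The attention mechanism can be sketched in a few lines. Below is scaled dot-product attention, the core operation of transformers, written in pure Python for a handful of toy vectors. Each query is scored against every key with a dot product scaled by the square root of the vector dimension, the scores become weights via a softmax, and the output is the weighted sum of the values. Real transformers add learned projections, multiple heads, and batching on top of this; the vectors here are made up.

```python
import math


def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights); weights[i][j] is how much position i attends to j."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Distance between positions plays no role: every key is scored directly.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append([
            sum(wj * v[c] for wj, v in zip(w, values))
            for c in range(len(values[0]))
        ])
    return outputs, weights


if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
    out, w = scaled_dot_product_attention([[1.0, 0.0]], keys, values)
    print([round(x, 3) for x in w[0]], [round(x, 3) for x in out[0]])
```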

Competition and Alternatives

Although Google Document AI has achieved considerable success and partners with numerous industries to deliver fast and accurate document analyses, several alternatives provide similar services. The following are some of the competitors to Google's Document AI service:

Amazon Textract

Like Google, Amazon Web Services (AWS) has served large corporations over the internet for a long time, and Amazon drew on years of AI research experience to develop Amazon Textract, an engine that performs similarly to Google's Document AI. Amazon Textract goes beyond plain words and text to interpret document structure, with features such as form and table extraction. The service is also subject to security regulations, and users can easily review its security processes.

ABBYY and Kofax

There are also solutions such as ABBYY and Kofax that are dedicated to PDF OCR. Their products include user-friendly interfaces for reading PDFs. However, because they are aimed at non-engineers, they are harder to incorporate directly into other programs to build a fully automated process. In addition, their services focus on OCR, which means data extraction and more advanced deep learning techniques for understanding documents are not fully offered.

Nanonets™

Nanonets™ is a company that specializes in OCR across all kinds of documents, from receipts and statements to invoices. Its deep learning models are trained on hundreds of thousands of specific targets, allowing them to perform exceptionally well on specialized tasks and to generalize to unseen documents. Non-engineers can use its friendly user interface, and computer scientists can easily use its APIs to incorporate the OCR capabilities into other PDF document-reading tasks.

Google Document AI Pricing

Google Document AI provides a range of processors with pricing based on the number of pages per month. For converting text into digital format, the cost is $1.50 per 1,000 pages for the first 5 million pages and $0.60 per 1,000 pages thereafter, with additional OCR add-ons costing $6 per 1,000 pages. If you need to extract entities such as key-value pairs or table data, the cost is $30 per 1,000 pages for the first million pages and $20 per 1,000 pages beyond that.
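Tiered rates are easy to misread, so a small calculator helps. The sketch below applies per-1,000-page tiers in order, using the rates quoted in this article (these may change, so check Google's current pricing page) and applying them to a month's page volume; `tiered_cost` and the tier constants are hypothetical names. For example, 6 million OCR pages would cost 5,000 × $1.50 + 1,000 × $0.60 = $8,100.

```python
def tiered_cost(pages, tiers):
    """Cost in USD of `pages` under ordered per-1,000-page tiers.

    tiers: list of (pages_in_tier, usd_per_1000), where pages_in_tier=None
    means "all remaining pages".
    """
    cost, remaining = 0.0, pages
    for size, rate in tiers:
        in_tier = remaining if size is None else min(remaining, size)
        cost += in_tier / 1000 * rate
        remaining -= in_tier
        if remaining <= 0:
            break
    return round(cost, 2)


# Rates as quoted in this article (monthly volume).
OCR_TIERS = [(5_000_000, 1.50), (None, 0.60)]
FORM_PARSER_TIERS = [(1_000_000, 30.00), (None, 20.00)]

if __name__ == "__main__":
    for pages in (10_000, 6_000_000):
        print(pages, "OCR pages:", tiered_cost(pages, OCR_TIERS), "USD")
```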

For document classification, the pricing ranges from $3 to $25 per 1,000 pages, depending on your chosen processor. Hosting custom processors is billed at $0.05 per hour for each deployed version. There are also legacy processors with per-document fees that vary according to the document type, such as invoice, expense, or bank statement, with pricing ranging from $0.05 to $0.30. Fine-tuning generative AI models may also incur additional costs.

Nanonets, on the other hand, offers a more straightforward and adaptable pricing model. It includes a Starter plan with pay-as-you-go pricing ($0.30 per page after the first 500 free pages), a Pro plan subscription ($999/month/workflow with 10,000 pages included), and a custom Enterprise plan with additional features and support. This tiered structure allows you to choose the plan that best suits your needs, making it a flexible and user-friendly option.
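With the figures quoted above, the break-even point between the Starter and Pro plans is simple arithmetic: (pages - 500) × $0.30 = $999 gives 3,830 pages per month. The sketch below encodes that comparison for a single workflow. Because the article does not state Pro's price beyond the 10,000 included pages, volumes above that are rejected rather than guessed; the constant and function names are hypothetical.

```python
FREE_PAGES = 500          # Starter: first 500 pages free
STARTER_PER_PAGE = 0.30   # USD per page after the free pages
PRO_MONTHLY = 999.0       # USD per month for one workflow
PRO_INCLUDED = 10_000     # pages included in the Pro plan


def starter_cost(pages: int) -> float:
    """Pay-as-you-go cost in USD for a month's pages."""
    return round(max(0, pages - FREE_PAGES) * STARTER_PER_PAGE, 2)


def cheaper_plan(pages: int) -> str:
    """Pick the cheaper plan for one workflow, within Pro's included pages."""
    if pages > PRO_INCLUDED:
        raise ValueError("Pro pricing beyond 10,000 pages is not given in the article")
    return "Starter" if starter_cost(pages) <= PRO_MONTHLY else "Pro"


if __name__ == "__main__":
    for pages in (2_000, 5_000):
        print(pages, "pages:", starter_cost(pages), "USD on Starter ->", cheaper_plan(pages))
```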

Conclusion

Google Document AI provides a powerful suite of tools for document processing, but it may not be the optimal solution for every business. Accuracy, flexibility, supported document types, and pricing should be carefully considered when choosing a document processing platform.

As the landscape of AI document processing continues to evolve, businesses should evaluate their specific needs and explore alternatives that can provide the right balance of features, performance, and cost-effectiveness. By selecting a solution that aligns with their unique requirements, organizations can successfully automate their document processing workflows and realize the full potential of their data.